HA Usage Example

This section describes how to get started with the HA cluster and add an instance. If you are not familiar with HA installation, see Installing and Deploying HA.


Quick Start Guide

- The following operations use the management platform newly developed by the community as an example.

Login Page

Log in with the user name hacluster and the password that was set for this user on the host.

Home Page

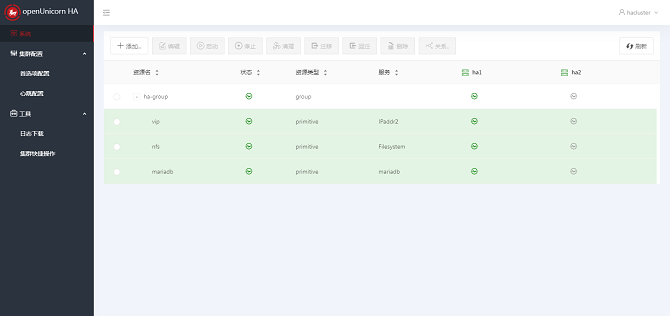

After logging in to the system, the main page is displayed. The main page consists of the side navigation bar, the top operation area, the resource node list area, and the node operation floating area.

The following describes the features and usage of the four areas in detail.

Navigation Bar

The side navigation bar consists of two parts: the name and logo of the HA cluster software, and the system navigation. The system navigation contains three items: System, Cluster Configurations, and Tools. System is selected by default and corresponds to the home page; it displays the information and operation entries of all resources in the system. Cluster Configurations contains Preference Settings and Heartbeat Configurations. Tools contains Log Download and Quick Cluster Operation, each of which opens in a pop-up dialog box when clicked.

Top Operation Area

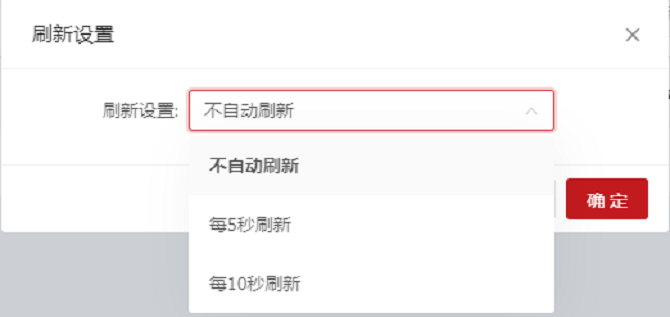

The name of the current login user is displayed. When you hover the mouse cursor over the user icon, a menu with Refresh Settings and Log Out is displayed. Click Refresh Settings to open the Refresh Settings dialog box, where you can set the automatic refresh mode of the system: Do not refresh automatically (the default), Refresh every 5 seconds, or Refresh every 10 seconds. Click Log Out to log out and return to the login page. You must log in again to continue accessing the system.

Resource Node List Area

The resource node list displays information about all resources in the system, such as Resource Name, Status, Resource Type, Service, and Running Node, as well as all nodes in the system and their running status. In addition, you can Add, Edit, Start, Stop, Clear, Migrate, Migrate Back, and Delete resources, and set Relationships for them.

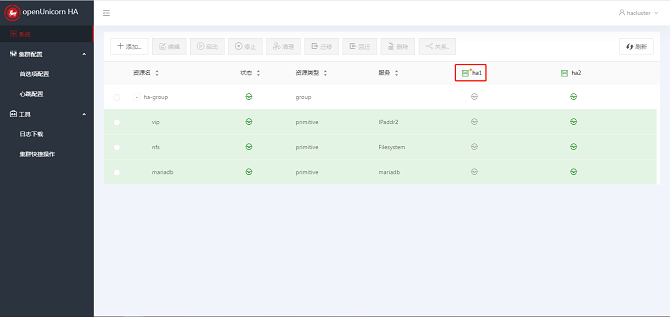

Node Operation Floating Area

By default, the node operation floating area is collapsed. When you click a node in the heading of the resource node list, the node operation area is displayed on the right, as shown in the preceding figure. This area consists of the collapse button, the node name, the stop button, and the standby button, and provides the stop and standby operations. Click the arrow in the upper left corner of the area to collapse the area.

Preference Settings

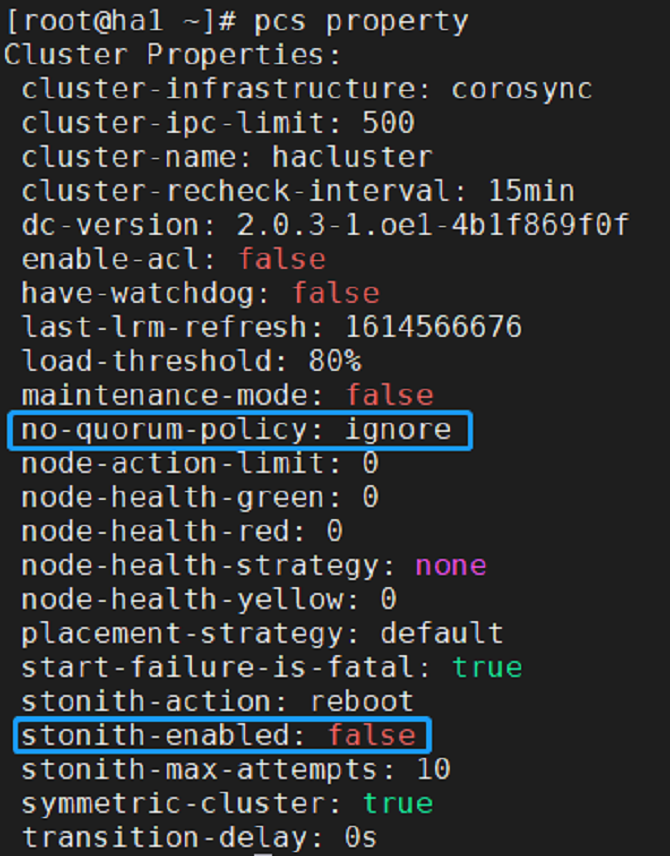

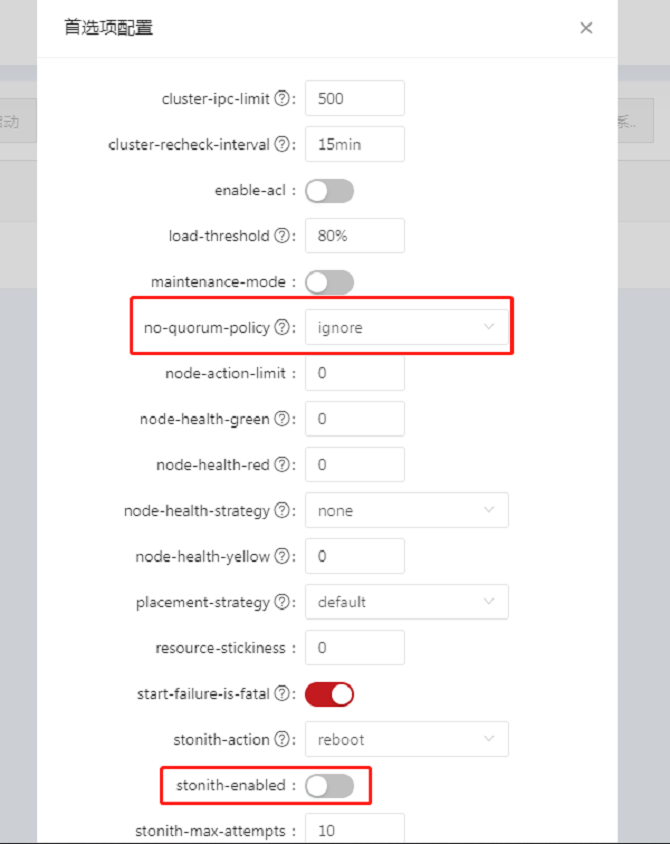

These settings can also be configured from the command line, as shown in the following example. For more command details, run the pcs --help command.

# pcs property set stonith-enabled=false

# pcs property set no-quorum-policy=ignore

Run pcs property to view all settings.

- Click Preference Settings in the navigation bar. In the Preference Settings dialog box that is displayed, change No Quorum Policy and Stonith Enabled from their default values to the values shown in the preceding figures, and then click OK.
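If you prefer to script the preference settings, the two pcs property set commands above can be wrapped as follows. This is a minimal sketch: `run`, `apply_ha_preferences`, and the `DRY_RUN` variable are hypothetical helpers introduced here, not part of pcs. When `DRY_RUN` is non-empty, the commands are printed instead of executed.

```shell
#!/bin/sh
# Hypothetical helper: print the command when DRY_RUN is non-empty, otherwise run it.
run() {
    if [ -n "${DRY_RUN:-}" ]; then
        printf '%s\n' "$*"
    else
        "$@"
    fi
}

# Apply the preference settings from this section, then display all properties.
apply_ha_preferences() {
    run pcs property set stonith-enabled=false || return 1
    run pcs property set no-quorum-policy=ignore || return 1
    run pcs property
}
```

For example, `DRY_RUN=1 apply_ha_preferences` only prints the three commands; running `apply_ha_preferences` as root on a cluster node applies them.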

Adding Resources

Adding Common Resources

Click Add Common Resource. The Create Resource dialog box is displayed. All mandatory configuration items of the resource are on the Basic page. After you select a Resource Type on the Basic page, the other mandatory and optional configuration items of that resource type are displayed. While you enter the configuration, a gray text area on the right of the dialog box describes the current configuration item. After setting all mandatory parameters, click OK to create the common resource, or click Cancel to cancel the operation. The items on the Instance Attribute, Meta Attribute, and Operation Attribute pages are optional and do not affect resource creation; modify them as required, or leave them unset to use the default values.

The following example describes how to add an Apache resource.

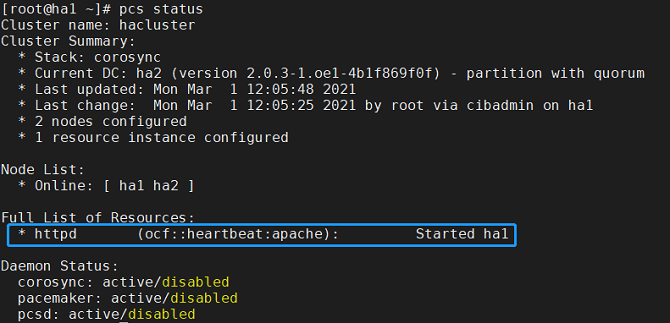

- Add the Apache resource:

# pcs resource create httpd ocf:heartbeat:apache

- Check the resource running status:

# pcs status

- If the following information is displayed, the resource is successfully added:

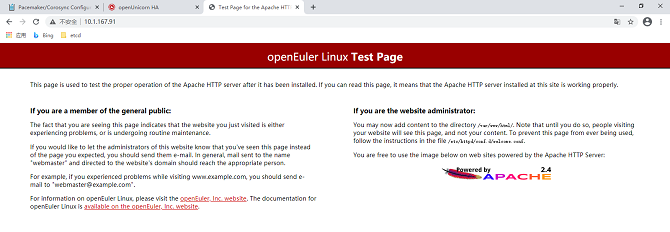

- The resource is successfully created and started, and runs on one node, for example, ha1. When you access the Apache service, the Apache page is displayed.
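To confirm from a script which node a resource is running on, the pcs status output can be filtered. This is a sketch under stated assumptions: `resource_node` is a hypothetical helper, and it assumes resource lines of the form `httpd (ocf::heartbeat:apache): Started ha1`, optionally prefixed with `*` as in newer Pacemaker releases. The exact layout of pcs status differs between versions, so compare it with your own output first.

```shell
#!/bin/sh
# Hypothetical helper: read `pcs status` output on stdin and print the node on
# which resource $1 is reported as "Started". Prints nothing otherwise.
resource_node() {
    awk -v res="$1" '
        {
            i = ($1 == "*") ? 2 : 1   # newer Pacemaker prefixes resource lines with "*"
            if ($i != res) next
            for (j = i + 1; j < NF; j++)
                if ($j == "Started") { print $(j + 1); exit }
        }'
}
```

For example, `pcs status | resource_node httpd` prints `ha1` in the scenario above. An empty result means the resource is not reported as Started.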

Adding Group Resources

Adding group resources requires at least one common resource in the cluster. Click Add Group Resource. The Create Resource dialog box is displayed. All the parameters on the Basic tab page are mandatory. After setting the parameters, click OK to add the resource or click Cancel to cancel the add operation.

- Note: Child resources in a group are started in the order in which they are listed. Therefore, select the child resources in the required startup order.

If the following information is displayed, the resource is successfully added:

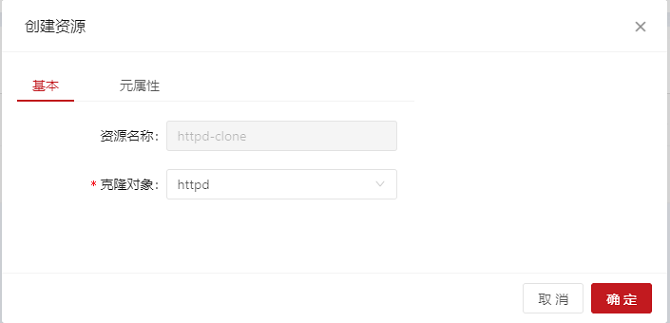

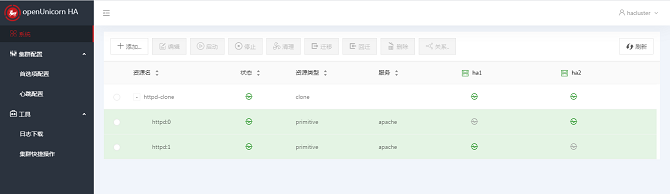
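On the command line, a group is created with pcs resource group add, which takes the group name followed by the child resources in start order; Pacemaker stops them in reverse order. In this minimal sketch, `run`, `create_group`, and `DRY_RUN` are hypothetical helpers, and `web_group` and `vip` in the usage note are example names.

```shell
#!/bin/sh
# Hypothetical helper: print the command when DRY_RUN is non-empty, otherwise run it.
run() {
    if [ -n "${DRY_RUN:-}" ]; then
        printf '%s\n' "$*"
    else
        "$@"
    fi
}

# Usage: create_group GROUP RESOURCE...
# Child resources start in the order given and stop in reverse order.
create_group() {
    if [ "$#" -lt 2 ]; then
        echo "usage: create_group GROUP RESOURCE..." >&2
        return 2
    fi
    group=$1
    shift
    run pcs resource group add "$group" "$@"
}
```

`DRY_RUN=1 create_group web_group vip httpd` prints the pcs command; without `DRY_RUN`, the group is created and `vip` is started before `httpd`.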

Adding Clone Resources

Click Add Clone Resource. The Create Resource dialog box is displayed. On the Basic page, enter the object to be cloned. The resource name is automatically generated. After entering the object name, click OK to add the resource, or click Cancel to cancel the add operation.

If the following information is displayed, the resource is successfully added:

Editing Resources

- Starting a resource: Select a target resource from the resource node list. The target resource must not be running. Start the resource.

- Stopping a resource: Select a target resource from the resource node list. The target resource must be running. Stop the resource.

- Clearing a resource: Select a target resource from the resource node list. Clear the resource.

- Migrating a resource: Select a target resource from the resource node list. The resource must be a running common resource or group resource. Click Migrate to move the resource to a specified node.

- Migrating back a resource: Select a target resource from the resource node list. The resource must have been migrated. Click Migrate Back to clear the migration settings of the resource and move it back to its original node. After you click Migrate Back, the status of the resource in the list changes in the same way as when the resource is started.

- Deleting a resource: Select a target resource from the resource node list. Delete the resource.
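For reference, these operations roughly correspond to pcs subcommands. The mapping below is an assumption based on the pcs 0.10 command set, not a description of how the management platform is implemented; in particular, treating Clear as pcs resource cleanup and Migrate Back as pcs resource clear (which removes the location constraint created by pcs resource move) is an interpretation. Newer pcs releases change how pcs resource move handles that constraint, so check `pcs resource --help` on your version. `run`, `resource_op`, and `DRY_RUN` are hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: print the command when DRY_RUN is non-empty, otherwise run it.
run() {
    if [ -n "${DRY_RUN:-}" ]; then
        printf '%s\n' "$*"
    else
        "$@"
    fi
}

# Usage: resource_op OPERATION RESOURCE [NODE]
# Assumed mapping of the platform operations to pcs commands.
resource_op() {
    op=$1 res=$2 node=${3:-}
    case $op in
        start)        run pcs resource enable "$res" ;;
        stop)         run pcs resource disable "$res" ;;
        clear)        run pcs resource cleanup "$res" ;;
        migrate)      run pcs resource move "$res" ${node:+"$node"} ;;
        migrate-back) run pcs resource clear "$res" ;;
        delete)       run pcs resource delete "$res" ;;
        *) echo "unknown operation: $op" >&2; return 2 ;;
    esac
}
```

For example, `resource_op migrate httpd ha2` moves httpd to ha2, and `resource_op migrate-back httpd` removes the resulting constraint.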

Setting Resource Relationships

Resource relationships set constraints on the target resource. There are three types of resource constraints: resource location, resource collaboration (colocation), and resource order.

- Resource location: sets the running level of the nodes in the cluster for the resource to determine the node where the resource runs during startup or switchover. The running levels are Primary Node and Standby 1 in descending order.

- Resource collaboration: indicates whether the target resource and other resources in the cluster run on the same node. Same Node indicates that this resource must run on the same node as the target resource. Mutually Exclusive indicates that this resource cannot run on the same node as the target resource.

- Resource order: sets the order in which the target resource and other resources in the cluster are started. Front Resource indicates that this resource must be started before the target resource. Follow-up Resource indicates that this resource can be started only after the target resource has started.
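The three relationship types correspond to Pacemaker location, colocation, and order constraints. The sketch below shows one way to express them with pcs. The helper names, the example scores (200 for the primary node and 100 for Standby 1), and the resource names are hypothetical; INFINITY and -INFINITY are the usual Pacemaker scores for "must run together" and "must never run together". The score syntax accepted by colocation add varies slightly across pcs versions (`score=INFINITY` or a bare score), so confirm it with `pcs constraint --help`.

```shell
#!/bin/sh
# Hypothetical helper: print the command when DRY_RUN is non-empty, otherwise run it.
run() {
    if [ -n "${DRY_RUN:-}" ]; then
        printf '%s\n' "$*"
    else
        "$@"
    fi
}

# Location: prefer_nodes RES NODE=SCORE...; a higher score means a more preferred node.
prefer_nodes() {
    res=$1
    shift
    run pcs constraint location "$res" prefers "$@"
}

# Colocation: colocate RES TARGET same|exclusive
colocate() {
    case $3 in
        same)      score=INFINITY ;;    # RES must run on the node running TARGET
        exclusive) score=-INFINITY ;;   # RES must never run on that node
        *) echo "mode must be 'same' or 'exclusive'" >&2; return 2 ;;
    esac
    run pcs constraint colocation add "$1" with "$2" "score=$score"
}

# Order: start_before FIRST THEN starts FIRST before THEN.
start_before() {
    run pcs constraint order start "$1" then start "$2"
}
```

For example, `prefer_nodes httpd ha1=200 ha2=100` makes ha1 the primary node and ha2 the first standby for httpd.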

HA MySQL Configuration Example

- Configure three common resources separately, then add them as a group resource.

Configuring the Virtual IP Address

On the home page, choose Add > Add Common Resource and set the parameters as follows:

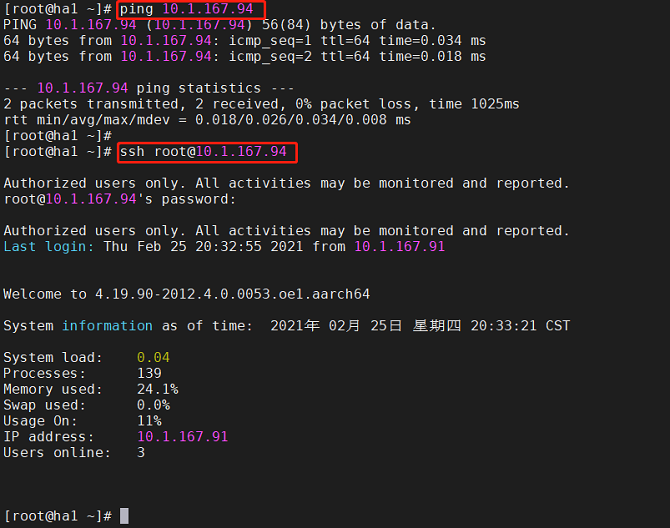
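From the command line, a virtual IP address resource is commonly created with the ocf:heartbeat:IPaddr2 agent. The values in the figure are not reproduced here: `create_vip`, the resource name `vip`, the /24 default prefix, and the 30-second monitor interval are assumptions, and 192.0.2.10 in the usage note is a documentation address to be replaced with your own.

```shell
#!/bin/sh
# Hypothetical helper: print the command when DRY_RUN is non-empty, otherwise run it.
run() {
    if [ -n "${DRY_RUN:-}" ]; then
        printf '%s\n' "$*"
    else
        "$@"
    fi
}

# Usage: create_vip IPV4_ADDRESS [PREFIX_LENGTH]
create_vip() {
    ip=$1 prefix=${2:-24}
    # Rough sanity check only: digits and dots, non-empty.
    case $ip in
        ''|*[!0-9.]*) echo "not an IPv4 address: $ip" >&2; return 2 ;;
    esac
    run pcs resource create vip ocf:heartbeat:IPaddr2 \
        ip="$ip" cidr_netmask="$prefix" op monitor interval=30s
}
```

For example, `DRY_RUN=1 create_vip 192.0.2.10` prints the pcs command that would create the resource.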

- The resource is successfully created and started, and runs on one node, for example, ha1. The virtual IP address can be pinged, and you can log in through it and perform operations normally. After the resource is switched to ha2, it can still be accessed normally.

- If the following information is displayed, the resource is successfully added:

Configuring NFS Storage

- Configure another host as the NFS server.

Install the software packages:

# yum install -y nfs-utils rpcbind

Run the following command to disable the firewall:

# systemctl stop firewalld && systemctl disable firewalld

Modify the /etc/selinux/config file to set SELINUX to disabled:

SELINUX=disabled

Start the services:

# systemctl start rpcbind && systemctl enable rpcbind

# systemctl start nfs-server && systemctl enable nfs-server

Create a shared directory on the server:

# mkdir -p /test

Modify the NFS configuration file:

# vim /etc/exports

Add the following line to the file:

/test *(rw,no_root_squash)

Reload the service:

# systemctl reload nfs-server
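The two file edits on the NFS server can be made repeatable with standard tools. In this sketch, `disable_selinux_config` and `add_export` are hypothetical helpers that take the file path as an argument, so they can be tried on a copy first. The SELinux change takes effect after a reboot (`setenforce 0` switches to permissive mode immediately), and `exportfs -r` re-reads /etc/exports, which is what reloading the nfs-server service does.

```shell
#!/bin/sh
# Hypothetical helper: set SELINUX=disabled in an SELinux config file.
# Lines such as SELINUXTYPE=... are left untouched. Uses GNU sed -i.
disable_selinux_config() {
    conf=${1:-/etc/selinux/config}
    sed -i 's/^SELINUX=.*/SELINUX=disabled/' "$conf"
}

# Hypothetical helper: add_export FILE DIR OPTIONS
# Appends "DIR OPTIONS" once; re-running does not duplicate the line.
add_export() {
    exports=$1 dir=$2 opts=$3
    line="$dir $opts"
    grep -qxF "$line" "$exports" 2>/dev/null || printf '%s\n' "$line" >> "$exports"
}
```

For example: `disable_selinux_config && add_export /etc/exports /test '*(rw,no_root_squash)' && exportfs -r`.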

Install the software packages on each client (cluster node). Install MariaDB (MySQL) first, because the NFS share is mounted to the MySQL data directory.

# yum install -y nfs-utils mariadb-server

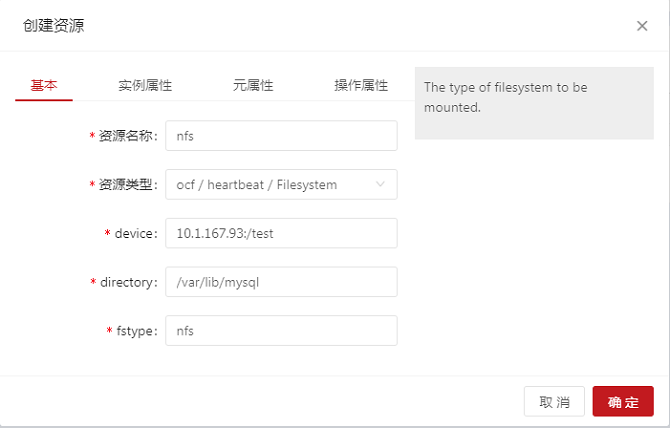

On the home page, choose Add > Add Common Resource and configure the NFS resource as follows:

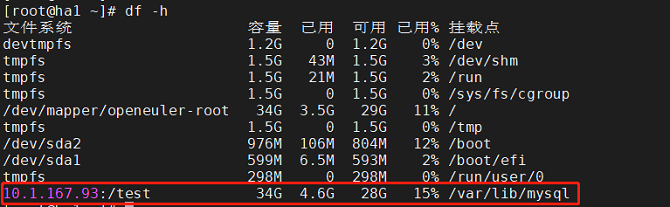
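A command-line equivalent of the NFS resource uses the ocf:heartbeat:Filesystem agent with its `device`, `directory`, and `fstype` parameters. The NFS server address is not given in the text, so `nfs-server` in the usage note is a hypothetical host name; `create_nfs_fs` and the resource name `nfs` are also assumptions.

```shell
#!/bin/sh
# Hypothetical helper: print the command when DRY_RUN is non-empty, otherwise run it.
run() {
    if [ -n "${DRY_RUN:-}" ]; then
        printf '%s\n' "$*"
    else
        "$@"
    fi
}

# Usage: create_nfs_fs SERVER EXPORT_DIR [MOUNT_DIR]
# Mounts SERVER:EXPORT_DIR on the node running the resource (default /var/lib/mysql).
create_nfs_fs() {
    server=$1 export_dir=$2 mount_dir=${3:-/var/lib/mysql}
    run pcs resource create nfs ocf:heartbeat:Filesystem \
        device="$server:$export_dir" directory="$mount_dir" fstype=nfs
}
```

For example, `DRY_RUN=1 create_nfs_fs nfs-server /test` prints the command for the /test share used in this example.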

- The resource is successfully created and started, and runs on one node, for example, ha1, where the NFS share is mounted to the /var/lib/mysql directory. When the resource is switched to ha2, the NFS share is unmounted from ha1 and automatically mounted on ha2.

- If the following information is displayed, the resource is successfully added:

Configuring MySQL

On the home page, choose Add > Add Common Resource and configure the MySQL resource as follows:

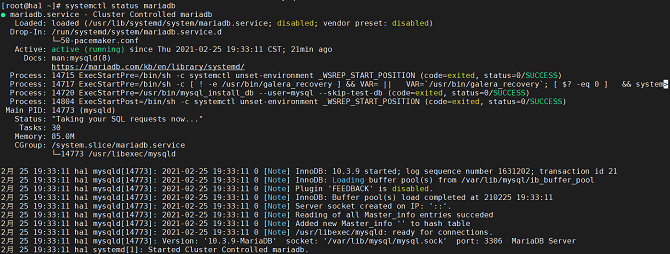

- If the following information is displayed, the resource is successfully added:

Adding the Preceding Resources as a Group Resource

- Add the three resources in the order in which they must start.

On the home page, choose Add > Add Group Resource and configure the group resource as follows:

- The group resource is successfully created and started. If the command output is the same as that of the preceding common resources, the group resource is successfully added.

- Put ha1 into standby mode so that the group resource migrates to ha2. The system continues to run properly.
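The standby test can also be run from the command line. This sketch assumes pcs 0.10 or later, where standby is managed with pcs node standby and pcs node unstandby (older releases use pcs cluster standby); `run`, `failover_test`, and `DRY_RUN` are hypothetical helpers. After the node leaves standby, whether the resources move back depends on constraints and resource stickiness.

```shell
#!/bin/sh
# Hypothetical helper: print the command when DRY_RUN is non-empty, otherwise run it.
run() {
    if [ -n "${DRY_RUN:-}" ]; then
        printf '%s\n' "$*"
    else
        "$@"
    fi
}

# Usage: failover_test NODE
failover_test() {
    node=$1
    run pcs node standby "$node" || return 1   # resources leave NODE
    run pcs status resources || return 1       # resources may still be moving; rerun as needed
    run pcs node unstandby "$node"             # NODE can host resources again
}
```

For example, `failover_test ha1` moves the group resource to ha2 and then returns ha1 to service.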

Quorum Device Configuration

Select a new machine as the quorum device. The current software package does not support testing the service with systemctl, so use pcs to start and stop the service. The procedure is as follows:

Installing Quorum Software

- Install corosync-qdevice on each cluster node.

[root@node1:~]# yum install corosync-qdevice

[root@node2:~]# yum install corosync-qdevice

- Install pcs and corosync-qnetd on the quorum device host.

[root@qdevice:~]# yum install pcs corosync-qnetd

- Start the pcsd service on the quorum device host and enable the pcsd service to start upon system startup.

[root@qdevice:~]# systemctl start pcsd.service

[root@qdevice:~]# systemctl enable pcsd.service

Modifying the Host Name and the /etc/hosts File

Note: Perform the following operations on all three hosts. The following uses one host as an example.

Before using the quorum function, change the host name, write all host names to the /etc/hosts file, and set the password for the hacluster user.

- Change the host name.

hostnamectl set-hostname node1

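- Write the IP addresses and host names of all three hosts to the /etc/hosts file. Each host must have its own IP address; the addresses below are examples.

10.1.167.105 node1

<IP address of node2> node2

10.1.167.106 qdevice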

- Set the password for the hacluster user.

passwd hacluster
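Editing /etc/hosts by hand on three hosts is easy to get wrong, for example by giving two hosts the same address. The hypothetical `add_host_entry` helper below is a sketch: it appends an entry only if the name is not already mapped, and refuses to reuse an address that already appears in the file.

```shell
#!/bin/sh
# Hypothetical helper: add_host_entry IP NAME [HOSTS_FILE]
# Returns 0 if NAME is already mapped or was added, 1 if IP is already in use.
add_host_entry() {
    ip=$1 name=$2 hosts=${3:-/etc/hosts}
    # Is NAME already mapped? Comment lines are ignored.
    if awk -v n="$name" '/^[ \t]*#/ { next }
        { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
        END { exit !found }' "$hosts" 2>/dev/null; then
        return 0
    fi
    # Every host needs its own address, so refuse to reuse one.
    if awk -v a="$ip" '/^[ \t]*#/ { next } $1 == a { found = 1 } END { exit !found }' \
        "$hosts" 2>/dev/null; then
        echo "warning: $ip is already used in $hosts" >&2
        return 1
    fi
    printf '%s %s\n' "$ip" "$name" >> "$hosts"
}
```

For example, `add_host_entry 10.1.167.106 qdevice` adds the quorum device entry to /etc/hosts on the current host.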

Configuring the Quorum Device and Adding It to the Cluster

The following describes how to configure the quorum device and add it to the cluster.

- The qdevice node is used as the quorum device.

- The model of the quorum device is net.

- The cluster nodes are node1 and node2.

Configuring the Quorum Device

On the node that will be used to host the quorum device, run the following command to configure the quorum device. This command sets the model of the quorum device to net and configures the device to start during boot.

[root@qdevice:~]# pcs qdevice setup model net --enable --start

Quorum device 'net' initialized

quorum device enabled

Starting quorum device...

quorum device started

After configuring the quorum device, view its status. The output shows that the corosync-qnetd daemon is running and that no client is connected to it yet. The --full option displays detailed output.

[root@qdevice:~]# pcs qdevice status net --full

QNetd address: *:5403

TLS: Supported (client certificate required)

Connected clients: 0

Connected clusters: 0

Maximum send/receive size: 32768/32768 bytes
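When checking the quorum device from a script, a single field of this status output can be extracted. This is a sketch: `qnetd_field` is a hypothetical helper that assumes the `Key: value` layout shown above and returns the first matching field.

```shell
#!/bin/sh
# Hypothetical helper: read `pcs qdevice status net --full` output on stdin
# and print the value of the first "KEY: value" line whose key equals $1.
qnetd_field() {
    awk -v key="$1" '
        {
            line = $0
            sub(/^[ \t]+/, "", line)          # ignore indentation
            p = index(line, ": ")
            if (p > 0 && substr(line, 1, p - 1) == key) {
                print substr(line, p + 2)
                exit
            }
        }'
}
```

For example, `pcs qdevice status net --full | qnetd_field 'Connected clients'` prints 0 at this stage.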

Disabling the Firewall and SELinux

systemctl stop firewalld && systemctl disable firewalld

- Change SELINUX to disabled in the /etc/selinux/config file.

SELINUX=disabled

Authenticating Identities

From a node in the cluster, authenticate the hacluster user on the node that hosts the quorum device. This allows pcs on the cluster to connect to pcs on the quorum device host, but does not allow pcs on the quorum device host to connect to pcs on the cluster.

[root@node1:~]# pcs host auth qdevice

Username: hacluster

Password:

qdevice: Authorized

Adding the Quorum Device to the Cluster

Before adding the quorum device, run the pcs quorum config command to view the current quorum configuration for later comparison.

[root@node1:~]# pcs quorum config

Options:

Run the pcs quorum status command to check the current quorum status. The output indicates that the cluster does not use a quorum device yet and that the Qdevice status of each node is NR (not registered).

[root@node1:~]# pcs quorum status

Quorum information

------------------

Date: Wed Jun 29 13:15:36 2016

Quorum provider: corosync_votequorum

Nodes: 2

Node ID: 1

Ring ID: 1/8272

Quorate: Yes

Votequorum information

----------------------

Expected votes: 2

Highest expected: 2

Total votes: 2

Quorum: 1

Flags: 2Node Quorate

Membership information

----------------------

Nodeid Votes Qdevice Name

1 1 NR node1 (local)

2 1 NR node2
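The Quorum value in this output follows from the corosync votequorum rule that a partition needs a majority of the expected votes, floor(expected / 2) + 1. With two votes that would be 2, but the 2Node flag (two_node mode) lowers it to 1 so that a single node can keep running. After the quorum device adds a third vote, the majority of 3 is 2. The hypothetical `quorum_votes` function below only encodes this arithmetic.

```shell
#!/bin/sh
# Hypothetical helper: quorum_votes EXPECTED_VOTES [TWO_NODE]
# Prints the votes needed for quorum: a simple majority, except that
# two_node mode (TWO_NODE=1) lets a 2-vote cluster stay quorate with 1 vote.
quorum_votes() {
    expected=$1
    two_node=${2:-0}
    if [ "$two_node" = 1 ] && [ "$expected" -eq 2 ]; then
        echo 1
    else
        echo $(( expected / 2 + 1 ))
    fi
}
```

`quorum_votes 2 1` matches the "Quorum: 1" line above, and `quorum_votes 3` gives the value expected once the quorum device is added.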

Add the created quorum device to the cluster. Note that multiple quorum devices cannot be used in a cluster at the same time. However, a quorum device can be used by multiple clusters at the same time. This example configures the quorum device to use the ffsplit algorithm.

[root@node1:~]# pcs quorum device add model net host=qdevice algorithm=ffsplit

Setting up qdevice certificates on nodes...

node2: Succeeded

node1: Succeeded

Enabling corosync-qdevice...

node1: corosync-qdevice enabled

node2: corosync-qdevice enabled

Sending updated corosync.conf to nodes...

node1: Succeeded

node2: Succeeded

Corosync configuration reloaded

Starting corosync-qdevice...

node1: corosync-qdevice started

node2: corosync-qdevice started

Checking the Configuration Status of the Quorum Device

Check the configuration changes in the cluster. Run the pcs quorum config command to view information about the configured quorum device.

[root@node1:~]# pcs quorum config

Options:

Device:

Model: net

algorithm: ffsplit

host: qdevice

The pcs quorum status command displays the quorum running status, indicating that the quorum device is in use. The meanings of the member status values of each cluster node are as follows:

- A/NA: The quorum device is alive (A) or not alive (NA), that is, whether there is a heartbeat between qdevice and corosync. This should always indicate that the quorum device is alive.

- V/NV: V is set when the quorum device votes for a node. In this example, both nodes are set to V because they can communicate with each other. If the cluster is split into two single-node clusters, one node is set to V and the other is set to NV.

- MW/NMW: The internal quorum device flag is set (MW) or not set (NMW). By default, the flag is not set and the value is NMW.

[root@node1:~]# pcs quorum status

Quorum information

------------------

Date: Wed Jun 29 13:17:02 2016

Quorum provider: corosync_votequorum

Nodes: 2

Node ID: 1

Ring ID: 1/8272

Quorate: Yes

Votequorum information

----------------------

Expected votes: 3

Highest expected: 3

Total votes: 3

Quorum: 2

Flags: Quorate Qdevice

Membership information

----------------------

Nodeid Votes Qdevice Name

1 1 A,V,NMW node1 (local)

2 1 A,V,NMW node2

0 1 Qdevice
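To read the Qdevice column of the membership table, the comma-separated flags can be decoded one by one. The hypothetical `decode_qdevice_flags` helper below is a sketch that uses the meanings listed earlier in this section, plus NR (not registered) from the output before the device was added.

```shell
#!/bin/sh
# Hypothetical helper: decode a Qdevice flag string such as "A,V,NMW",
# printing one explanation per flag.
decode_qdevice_flags() {
    printf '%s\n' "$1" | tr ',' '\n' | while IFS= read -r flag; do
        case $flag in
            A)   echo "A: quorum device is alive" ;;
            NA)  echo "NA: quorum device is not alive" ;;
            V)   echo "V: quorum device votes for this node" ;;
            NV)  echo "NV: quorum device does not vote for this node" ;;
            MW)  echo "MW: internal quorum device flag is set" ;;
            NMW) echo "NMW: internal quorum device flag is not set" ;;
            NR)  echo "NR: node is not registered with a quorum device" ;;
            *)   echo "$flag: unknown flag" ;;
        esac
    done
}
```

For example, `decode_qdevice_flags A,V,NMW` explains the flags shown for node1 and node2 above.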

Run the pcs quorum device status command to view the running status of the quorum device.

[root@node1:~]# pcs quorum device status

Qdevice information

-------------------

Model: Net

Node ID: 1

Configured node list:

0 Node ID = 1

1 Node ID = 2

Membership node list: 1, 2

Qdevice-net information

----------------------

Cluster name: mycluster

QNetd host: qdevice:5403

Algorithm: ffsplit

Tie-breaker: Node with lowest node ID

State: Connected

On the quorum device, run the following command to display the status of the corosync-qnetd daemon:

[root@qdevice:~]# pcs qdevice status net --full

QNetd address: *:5403

TLS: Supported (client certificate required)

Connected clients: 2

Connected clusters: 1

Maximum send/receive size: 32768/32768 bytes

Cluster "mycluster":

Algorithm: ffsplit

Tie-breaker: Node with lowest node ID

Node ID 2:

Client address: ::ffff:192.168.122.122:50028

HB interval: 8000ms

Configured node list: 1, 2

Ring ID: 1.2050

Membership node list: 1, 2

TLS active: Yes (client certificate verified)

Vote: ACK (ACK)

Node ID 1:

Client address: ::ffff:192.168.122.121:48786

HB interval: 8000ms

Configured node list: 1, 2

Ring ID: 1.2050

Membership node list: 1, 2

TLS active: Yes (client certificate verified)

Vote: ACK (ACK)

Managing Quorum Device Services

pcs can manage the quorum device service (corosync-qnetd) on the local host, as shown in the following example commands. Note that these commands affect only the corosync-qnetd service.

[root@qdevice:~]# pcs qdevice start net

[root@qdevice:~]# pcs qdevice stop net

[root@qdevice:~]# pcs qdevice enable net

[root@qdevice:~]# pcs qdevice disable net

[root@qdevice:~]# pcs qdevice kill net

After you run the pcs qdevice stop net command, the State value in the pcs quorum device status output on the cluster nodes changes from Connected to Connect failed. After you run the pcs qdevice start net command again, State changes back to Connected.
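A monitoring script can check this State line. The hypothetical `qdevice_connected` helper below is a sketch that succeeds only when the pcs quorum device status output reports exactly `State: Connected`, so `Connect failed` counts as a failure.

```shell
#!/bin/sh
# Hypothetical helper: read `pcs quorum device status` output on stdin and
# succeed only if it contains the line "State: Connected".
qdevice_connected() {
    grep -qE '^[[:space:]]*State:[[:space:]]+Connected[[:space:]]*$'
}
```

For example, run `pcs quorum device status | qdevice_connected || echo "quorum device not connected"` on a cluster node.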

Managing the Quorum Device in the Cluster

You can use the pcs commands to change quorum device settings, disable the quorum device, and delete the quorum device from the cluster.

Changing Quorum Device Settings

Note: To change the host option of the net quorum device model, run the pcs quorum device remove and pcs quorum device add commands to reconfigure the device correctly, unless the old and new hosts are the same machine.

- Change the quorum device algorithm to lms.

[root@node1:~]# pcs quorum device update model algorithm=lms

Sending updated corosync.conf to nodes...

node1: Succeeded

node2: Succeeded

Corosync configuration reloaded

Reloading qdevice configuration on nodes...

node1: corosync-qdevice stopped

node2: corosync-qdevice stopped

node1: corosync-qdevice started

node2: corosync-qdevice started
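Because the updated corosync.conf is sent to every node, a small wrapper can reject a mistyped algorithm name before it is applied. This sketch only accepts the two algorithms used in this section, ffsplit and lms; `run`, `set_qdevice_algorithm`, and `DRY_RUN` are hypothetical helpers.

```shell
#!/bin/sh
# Hypothetical helper: print the command when DRY_RUN is non-empty, otherwise run it.
run() {
    if [ -n "${DRY_RUN:-}" ]; then
        printf '%s\n' "$*"
    else
        "$@"
    fi
}

# Usage: set_qdevice_algorithm ffsplit|lms
set_qdevice_algorithm() {
    case ${1:-} in
        ffsplit|lms)
            run pcs quorum device update model algorithm="$1" ;;
        *)
            echo "usage: set_qdevice_algorithm ffsplit|lms" >&2
            return 2 ;;
    esac
}
```

For example, `set_qdevice_algorithm lms` runs the same update command as above, while a typo such as `lsm` is rejected without touching the cluster.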

Deleting the Quorum Device

- Remove the quorum device from the cluster configuration. Run the following command on a cluster node.

[root@node1:~]# pcs quorum device remove

Sending updated corosync.conf to nodes...

node1: Succeeded

node2: Succeeded

Corosync configuration reloaded

Disabling corosync-qdevice...

node1: corosync-qdevice disabled

node2: corosync-qdevice disabled

Stopping corosync-qdevice...

node1: corosync-qdevice stopped

node2: corosync-qdevice stopped

Removing qdevice certificates from nodes...

node1: Succeeded

node2: Succeeded

After the quorum device is deleted, check the quorum device status. The following error message is displayed:

[root@node1:~]# pcs quorum device status

Error: Unable to get quorum status: corosync-qdevice-tool: Can't connect to QDevice socket (is QDevice running?): No such file or directory

Destroying the Quorum Device

- Disable and stop the quorum device on the quorum device host and delete all its configuration files.

[root@qdevice:~]# pcs qdevice destroy net

Stopping quorum device...

quorum device stopped

quorum device disabled

Quorum device 'net' configuration files removed
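The removal and destruction steps run on different hosts, cluster side first, so that the cluster stops using the device before corosync-qnetd is destroyed. This sketch assumes root SSH access from a cluster node to the quorum device host; `run`, `teardown_qdevice`, and `DRY_RUN` are hypothetical helpers.

```shell
#!/bin/sh
# Hypothetical helper: print the command when DRY_RUN is non-empty, otherwise run it.
run() {
    if [ -n "${DRY_RUN:-}" ]; then
        printf '%s\n' "$*"
    else
        "$@"
    fi
}

# Usage (on a cluster node): teardown_qdevice [QDEVICE_HOST]
teardown_qdevice() {
    qhost=${1:-qdevice}
    # Step 1, on this cluster node: stop using the quorum device.
    run pcs quorum device remove || return 1
    # Step 2, on the quorum device host (assumes root SSH access):
    # stop and disable corosync-qnetd and delete its configuration files.
    run ssh "root@$qhost" pcs qdevice destroy net
}
```

For example, `DRY_RUN=1 teardown_qdevice qdevice` prints both commands in the order in which they would run.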