K3s Deployment Guide

Introduction to K3s

K3s is a lightweight Kubernetes distribution optimized for edge computing and IoT scenarios. It provides the following enhancements:

- Packaged as a single binary file.

- Uses a lightweight SQLite3-based storage backend by default, and also supports etcd3, MySQL, and PostgreSQL.

- Wrapped in a simple launcher that handles much of the complexity of TLS and options.

- Secure by default, with reasonable defaults for lightweight environments.

- Batteries included: simple but powerful features such as a local storage provider, a service load balancer, a Helm controller, and the Traefik Ingress controller.

- Runs all Kubernetes control plane components in a single binary and process, which lets K3s automate and manage complex cluster operations such as certificate distribution.

- Minimizes external dependencies: only a modern kernel and cgroup mounts are required.
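Because K3s needs little more than a kernel and mounted cgroups, a quick pre-install check is to look for a cgroup filesystem in the mount table. The following is a minimal sketch, assuming mount-table lines in the /proc/mounts layout (device, mount point, filesystem type, ...); the function name cgroups_mounted is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: succeeds if any line read on stdin (in /proc/mounts
# format) has a filesystem type (3rd field) of cgroup or cgroup2.
cgroups_mounted() {
  awk '$3 == "cgroup" || $3 == "cgroup2" { found = 1 }
       END { exit found ? 0 : 1 }'
}

# Usage on a node before installing K3s:
# cgroups_mounted < /proc/mounts && echo "cgroups are mounted"
```

For a fuller kernel and cgroup check, the k3s binary also provides the k3s check-config subcommand once it is installed.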

Application Scenarios

K3s is applicable to the following scenarios:

- Edge computing

- IoT

- Continuous integration (CI)

- Development

- Arm

- Embedded Kubernetes

K3s requires relatively few resources to run, so it also suits development and testing. In these scenarios, its short cluster startup time and low resource consumption make it easier to verify functions and reproduce problems.

Deploying K3s

Step 1 Making Preparations

- Ensure that the host names of the server node and agent node are different.

To change a host name, run hostnamectl set-hostname "<host name>". For example, to name the agent node agent:

hostnamectl set-hostname agent

- Install K3s on each node using Yum.

The K3s official website provides binary executables for different architectures and the install.sh script for offline installation. The openEuler community builds the binary within the community and releases it as an RPM package, which you can download and install with the yum command:

yum install k3s
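Both preparation steps can be verified with a small preflight sketch. It assumes you collect each node's host name (for example with the hostname command) and that yum install k3s puts the k3s and k3s-install.sh commands on PATH, as the later steps of this guide assume; the function names are hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: succeeds only if all host names given as arguments differ.
hostnames_unique() {
  dup=$(printf '%s\n' "$@" | sort | uniq -d)
  if [ -n "$dup" ]; then
    echo "duplicate host name(s): $dup" >&2
    return 1
  fi
  return 0
}

# Hypothetical helper: succeeds only if every named command is on PATH.
require_cmds() {
  missing=0
  for c in "$@"; do
    if ! command -v "$c" >/dev/null 2>&1; then
      echo "missing command: $c" >&2
      missing=1
    fi
  done
  return "$missing"
}

# Usage (host names are examples):
# hostnames_unique k3s-server agent && require_cmds k3s k3s-install.sh
```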

Step 2 Deploying the Server Node

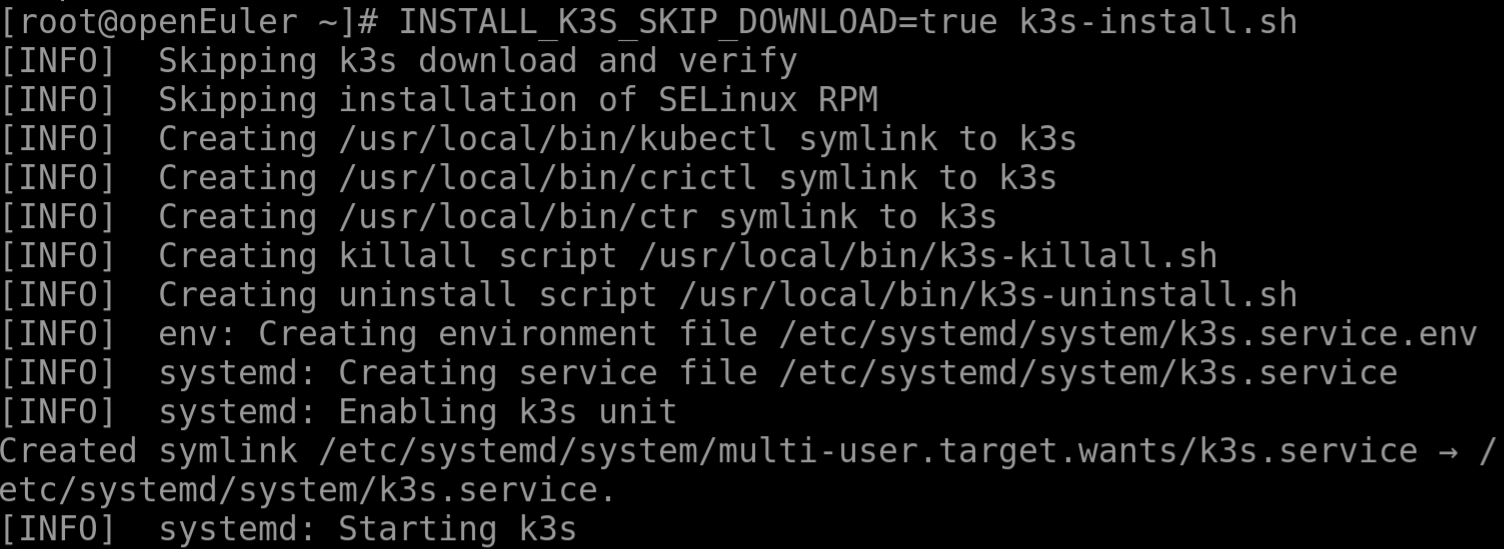

To install K3s on a single server, run the following command on the server node:

INSTALL_K3S_SKIP_DOWNLOAD=true k3s-install.sh

Step 3 Checking Server Deployment

Run the following command on the server node and check that the node is in the Ready state:

kubectl get nodes
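To script this check, for example while waiting for the server to finish starting, you can parse the command output. A minimal sketch, assuming the default kubectl get nodes columns (NAME, STATUS, ROLES, AGE, VERSION); all_nodes_ready is a hypothetical name.

```shell
#!/bin/sh
# Hypothetical helper: reads `kubectl get nodes` output on stdin and succeeds
# only if at least one node is listed and every STATUS (2nd column) is "Ready".
all_nodes_ready() {
  awk 'NR > 1 && NF > 0 { n++; if ($2 != "Ready") bad++ }
       END { exit (n > 0 && bad == 0) ? 0 : 1 }'
}

# Usage: poll until the server node reports Ready.
# until kubectl get nodes | all_nodes_ready; do sleep 5; done
```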

Step 4 Deploying the Agent Node

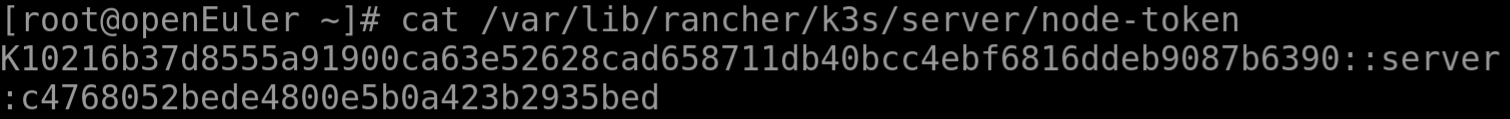

Query the token of the server node. It is stored in the /var/lib/rancher/k3s/server/node-token file on the server node:

cat /var/lib/rancher/k3s/server/node-token

Note:

Only the second half of the token is used.

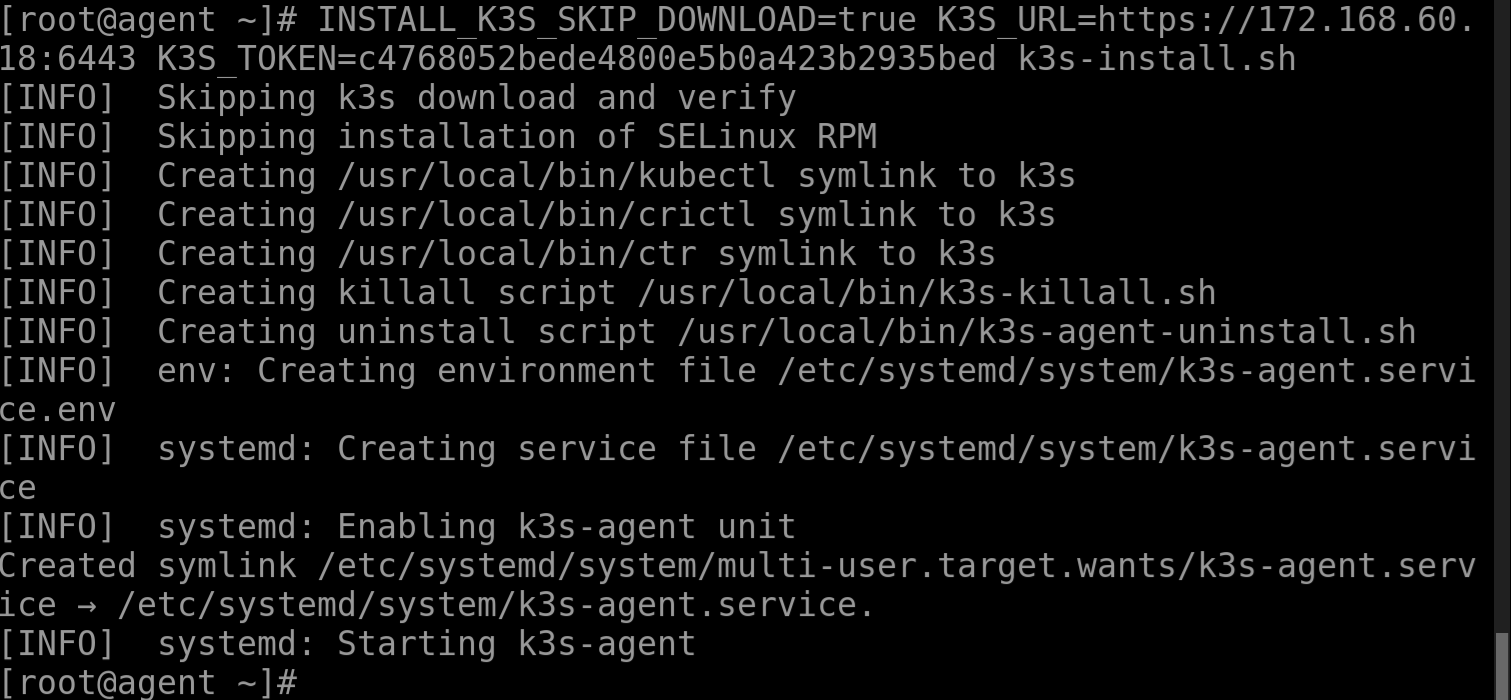
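To extract the second half of the token in a script, the following minimal sketch assumes the token file holds a single line of the form K10<CA hash>::server:<password> and treats the second half as everything after the last colon; token_second_half is a hypothetical name.

```shell
#!/bin/sh
# Hypothetical helper: prints the part of a K3s token after its last colon.
# Assumes the format K10<CA hash>::server:<password>.
token_second_half() {
  printf '%s\n' "${1##*:}"
}

# Usage on the server node:
# token_second_half "$(cat /var/lib/rancher/k3s/server/node-token)"
```

Upstream K3s documentation passes the full contents of node-token as K3S_TOKEN, which also works; the K10 prefix carries a hash of the cluster CA that the agent can use to validate the server.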

Run the following commands on each agent node to open the required ports and join the node to the cluster:

firewall-cmd --add-port=6443/tcp --zone=public --permanent

firewall-cmd --add-port=8472/udp --zone=public --permanent

firewall-cmd --reload

INSTALL_K3S_SKIP_DOWNLOAD=true K3S_URL=https://myserver:6443 K3S_TOKEN=mynodetoken k3s-install.sh

Note:

Replace myserver with the IP address or a resolvable DNS name of the server node, and replace mynodetoken with the token of the server node.
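The agent-side commands can be wrapped in one function, sketched below. Port 6443/tcp is the Kubernetes API server port and 8472/udp is the flannel VXLAN port. The function name join_agent and the RUN dry-run switch are hypothetical, and the K3S_URL check only tests for a rough https://host:port shape.

```shell
#!/bin/sh
# Hypothetical helper wrapping the agent-side commands.
# Expects K3S_URL and K3S_TOKEN to be set; set RUN=echo for a dry run that
# prints each command instead of executing it (the token is printed too).
join_agent() {
  run=${RUN:-}
  # Rough shape check only: https://<host>:<port>
  case ${K3S_URL:-} in
    https://?*:[0-9]*) ;;
    *) echo "K3S_URL should look like https://myserver:6443" >&2; return 1 ;;
  esac
  if [ -z "${K3S_TOKEN:-}" ]; then
    echo "K3S_TOKEN is empty" >&2
    return 1
  fi
  # Open the API server port (TCP) and the flannel VXLAN port (UDP).
  for p in 6443/tcp 8472/udp; do
    $run firewall-cmd --add-port="$p" --zone=public --permanent || return 1
  done
  $run firewall-cmd --reload || return 1
  # Pass the variables to the installer explicitly through env.
  $run env INSTALL_K3S_SKIP_DOWNLOAD=true K3S_URL="$K3S_URL" \
    K3S_TOKEN="$K3S_TOKEN" k3s-install.sh
}

# Usage on an agent node (values are placeholders):
# K3S_URL=https://myserver:6443 K3S_TOKEN=mynodetoken join_agent
```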

Step 5 Checking Agent Deployment

After the installation is complete, run kubectl get nodes on the server node to check whether the agent node is successfully registered.
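To check one specific agent in a script, the sketch below assumes the default kubectl get nodes columns and uses the hypothetical name node_registered; the node name agent comes from the host name set in Step 1.

```shell
#!/bin/sh
# Hypothetical helper: reads `kubectl get nodes` output on stdin and succeeds
# if a node named $1 is listed with STATUS (2nd column) exactly "Ready".
node_registered() {
  awk -v name="$1" 'NR > 1 && $1 == name { found = 1; ready = ($2 == "Ready") }
       END { exit (found && ready) ? 0 : 1 }'
}

# Usage on the server node:
# kubectl get nodes | node_registered agent && echo "agent joined"
```

kubectl can also block until the node is ready, for example with kubectl wait --for=condition=Ready node/agent --timeout=120s.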

A basic K3s cluster is now set up.

Deploying the First Nginx Service on K3s

Step 1 Creating a Deployment

In Kubernetes, applications are deployed using Deployment objects. Create the deployment.yml file with the following content:

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
  labels:
    app: nginx
spec:
  replicas: 1
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx:alpine
        ports:
        - containerPort: 80

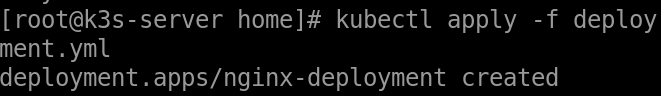

Run the kubectl apply command to create the deployment from deployment.yml:

[root@k3s-server home]# kubectl apply -f deployment.yml

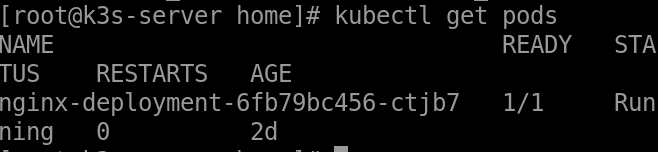

After the deployment is created, check whether the pods are in the Running state:

[root@k3s-server home]# kubectl get pods
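To check the pod status in a script, the sketch below assumes the default kubectl get pods columns (NAME, READY, STATUS, RESTARTS, AGE); all_pods_running is a hypothetical name.

```shell
#!/bin/sh
# Hypothetical helper: reads `kubectl get pods` output on stdin and succeeds
# only if at least one pod is listed and every STATUS (3rd column) is "Running".
all_pods_running() {
  awk 'NR > 1 && NF > 0 { n++; if ($3 != "Running") bad++ }
       END { exit (n > 0 && bad == 0) ? 0 : 1 }'
}

# Usage, limited to the pods created from deployment.yml:
# kubectl get pods -l app=nginx | all_pods_running && echo "nginx pods Running"
```

Alternatively, kubectl rollout status deployment/nginx-deployment waits until the deployment's rollout has finished.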

Step 2 Creating a Service

Creating the deployment only runs Nginx inside the cluster. To make it reachable from outside the cluster, create a service. Create the service.yml file with the following content; the NodePort type exposes port 80 of the Nginx pods on port 30080 of every node:

apiVersion: v1
kind: Service
metadata:
  name: nginx-service
spec:
  selector:
    app: nginx
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80
    nodePort: 30080
  type: NodePort

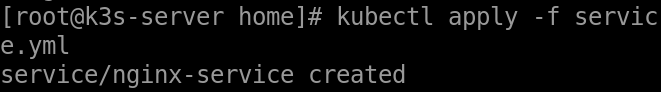
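Kubernetes accepts a nodePort only inside the API server's service node port range, which defaults to 30000-32767 (configurable with the kube-apiserver --service-node-port-range flag), so 30080 is valid under the default settings. A minimal sketch of that check, assuming the default range; valid_node_port is a hypothetical name.

```shell
#!/bin/sh
# Hypothetical helper: succeeds if $1 is an integer inside the default
# Kubernetes NodePort range 30000-32767.
valid_node_port() {
  case $1 in
    ''|*[!0-9]*) return 1 ;;  # reject empty or non-numeric input
  esac
  [ "$1" -ge 30000 ] && [ "$1" -le 32767 ]
}

# Usage:
# valid_node_port 30080 && echo "30080 is in the default NodePort range"
```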

Run the kubectl apply command to create the service from service.yml:

[root@k3s-server home]# kubectl apply -f service.yml

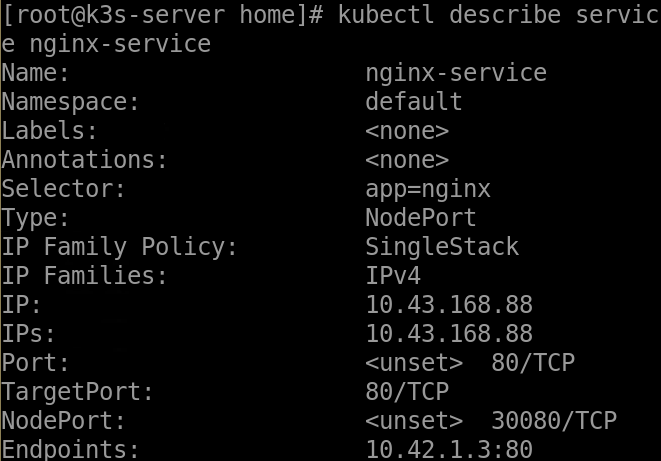

Step 3 Viewing Service Information

[root@k3s-server home]# kubectl describe service nginx-service

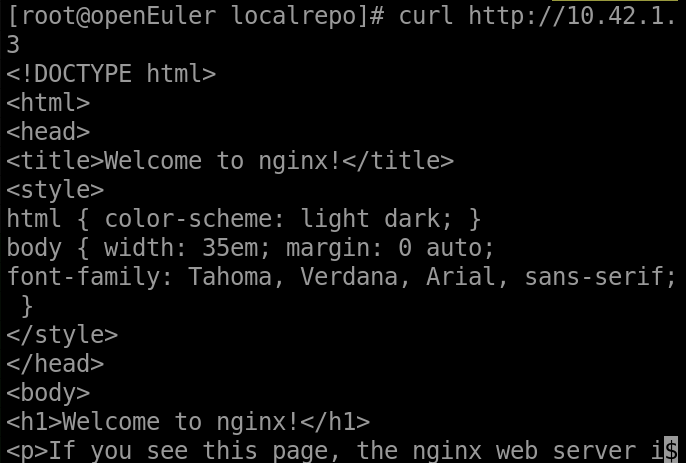
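To read the assigned node port in a script, you can parse the NodePort line of the kubectl describe service output. A minimal sketch, assuming that line has the shape NodePort: <port name> <number>/TCP (the port name is shown as <unset> when the port is unnamed, as in service.yml); describe_node_port is a hypothetical name.

```shell
#!/bin/sh
# Hypothetical helper: reads `kubectl describe service` output on stdin and
# prints the number from the first "NodePort:" line (e.g. 30080 from 30080/TCP).
describe_node_port() {
  awk '$1 == "NodePort:" { split($NF, parts, "/"); print parts[1]; exit }'
}

# Usage:
# kubectl describe service nginx-service | describe_node_port
```

kubectl can also return the field directly: kubectl get service nginx-service -o jsonpath='{.spec.ports[0].nodePort}'.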

Step 4 Accessing the Service

From a host on the internal network, run curl against port 30080 of a cluster node, for example curl http://<server IP>:30080. If the output contains the Nginx welcome page, the Nginx service is reachable from outside the cluster.
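The access check can be scripted along the lines of the sketch below. It assumes the default nginx image serves a page containing the text "Welcome to nginx!" and that 30080 is the nodePort from service.yml; check_nginx and the FETCH override are hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: requests http://<host>:<port>/ (port defaults to the
# nodePort 30080) and succeeds if the response contains the nginx welcome text.
# FETCH may name another command to use instead of curl (e.g. for testing).
check_nginx() {
  url="http://$1:${2:-30080}/"
  ${FETCH:-curl -fsS --max-time 5} "$url" | grep -q 'Welcome to nginx!'
}

# Usage from a host on the internal network:
# check_nginx <server IP> && echo "Nginx is reachable"
```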

The Nginx service is now running in the cluster.

More

For details about how to use K3s, visit the K3s official website at https://rancher.com/docs/k3s/latest/en.