1 Hardware Preparation

Test Mode

Prepare two physical machines that can communicate with each other over the network. (VMs have not been tested.)

One physical machine functions as the DPU, and the other functions as the host. In this document, DPU and HOST refer to the two physical machines.

NOTE: In test mode, network ports are exposed without connection authentication. This is risky, so use this mode only for internal testing and verification. Do not use it in a production environment.

vsock Mode

This mode requires an actual DPU and HOST. The DPU must be able to provide vsock communication through virtio.

This document describes only the test mode usage.

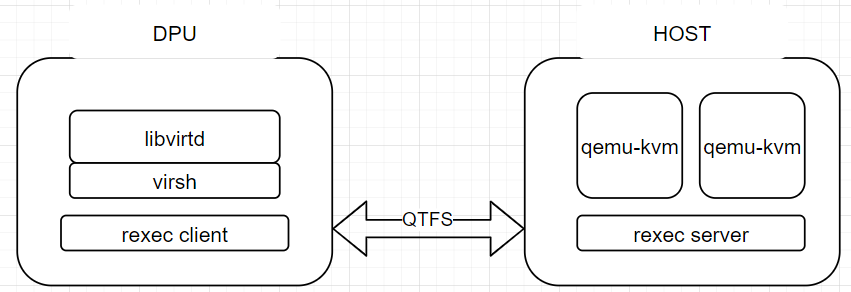

2 libvirt offload architecture

3 Environment Setup

3.1 qtfs File System Deployment

For details, visit https://gitee.com/openeuler/dpu-utilities/tree/master/qtfs.

To establish a qtfs connection, you need to disable the firewall.

3.2 Deploying the udsproxyd Service

3.2.1 Introduction

udsproxyd is a cross-host Unix domain socket (UDS) proxy service that must be deployed on both the HOST and the DPU. The udsproxyd instances on the two sides are peers. Together they provide seamless UDS communication between the HOST and the DPU: if two processes can communicate through UDSs on the same machine, they can do the same across the HOST and the DPU. The code of the processes does not need to be modified; only the client process needs to run with the LD_PRELOAD=libudsproxy.so environment variable. With qtfs support, udsproxyd can also be used transparently, without LD_PRELOAD, provided that an allowlist is configured in advance. The specific operations are described later.

3.2.2 Deploying udsproxyd

Build udsproxyd in the dpu-utilities project:

cd qtfs/ipc

make -j UDS_TEST_MODE=1 && make install

The engine service on the qtfs server already includes the udsproxyd functionality, so you do not need to start udsproxyd manually on a machine where the qtfs server is deployed. On the client, however, start udsproxyd by running the following command:

nohup /usr/bin/udsproxyd <thread num> <addr> <port> <peer addr> <peer port> 2>&1 &

Parameters:

thread num: number of threads. Currently, only one thread is supported.

addr: IP address of the local machine.

port: port used on the local machine.

peer addr: IP address of the machine running the peer udsproxyd.

peer port: port used by the peer udsproxyd.

Example:

nohup /usr/bin/udsproxyd 1 192.168.10.10 12121 192.168.10.11 12121 2>&1 &

If the qtfs engine service is not running on the server, you can start udsproxyd on the server separately to test it:

nohup /usr/bin/udsproxyd 1 192.168.10.11 12121 192.168.10.10 12121 2>&1 &
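The command line above can be wrapped in a small shell helper that rejects obviously wrong arguments before udsproxyd is launched in the background. This is a sketch only. The function names udsproxyd_check and start_udsproxyd are hypothetical, and the checks cover only what this section states: one thread and numeric ports.

```shell
# Sketch: check udsproxyd arguments before starting it in the background.
# Argument order, as documented:
#   udsproxyd <thread num> <addr> <port> <peer addr> <peer port>

udsproxyd_check() {
    if [ "$#" -ne 5 ]; then
        echo "usage: <thread num> <addr> <port> <peer addr> <peer port>" >&2
        return 2
    fi
    # Only one thread is currently supported.
    if [ "$1" != "1" ]; then
        echo "thread num must be 1, got: $1" >&2
        return 1
    fi
    # Both ports must be decimal numbers in the range 1-65535.
    local p
    for p in "$3" "$5"; do
        case $p in
            ''|*[!0-9]*) echo "invalid port: $p" >&2; return 1 ;;
        esac
        if [ "$p" -lt 1 ] || [ "$p" -gt 65535 ]; then
            echo "port out of range: $p" >&2
            return 1
        fi
    done
}

# Start udsproxyd detached; nohup and 2>&1 behave as in the command above.
start_udsproxyd() {
    udsproxyd_check "$@" || return
    nohup /usr/bin/udsproxyd "$@" 2>&1 &
}
```

For example, start_udsproxyd 1 192.168.10.10 12121 192.168.10.11 12121 on the client is equivalent to the client example above, with the arguments checked first.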

3.2.3 Using udsproxyd

3.2.3.1 Using udsproxyd Independently

When starting the client process of a Unix socket application that uses the UDS service, set the LD_PRELOAD=libudsproxy.so environment variable. The library then intercepts the glibc connect API to provide UDS interconnection. In the libvirt offload scenario, copy libudsproxy.so to the /usr/lib64 directory inside the libvirt chroot directory so that the libvirtd service can use it.
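The following sketch shows how a client can be started with libudsproxy.so preloaded and how the library can be copied into the libvirt chroot. It assumes the library is at /usr/lib64/libudsproxy.so after make install. Adjust UDSPROXY_LIB if your build puts it elsewhere. The variable and function names are hypothetical.

```shell
# Sketch: run a UDS client with libudsproxy.so preloaded.
# Assumption: the library was installed to /usr/lib64; override UDSPROXY_LIB otherwise.
UDSPROXY_LIB=${UDSPROXY_LIB:-/usr/lib64/libudsproxy.so}

# Run "$@" with libudsproxy.so preloaded, keeping any existing LD_PRELOAD entries.
with_udsproxy() {
    if [ ! -f "$UDSPROXY_LIB" ]; then
        echo "missing $UDSPROXY_LIB" >&2
        return 1
    fi
    LD_PRELOAD="$UDSPROXY_LIB${LD_PRELOAD:+:$LD_PRELOAD}" "$@"
}

# Copy libudsproxy.so to <chroot dir>/usr/lib64 so that libvirtd can preload it.
install_udsproxy_into_chroot() {
    local chroot_dir=$1
    install -D -m 0755 "$UDSPROXY_LIB" "$chroot_dir/usr/lib64/libudsproxy.so"
}
```

For example, with_udsproxy virtlogd -d starts virtlogd with the library preloaded.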

3.2.3.2 Using the udsproxyd Service Transparently

Configure the UDS service allowlist for qtfs. Each allowlist entry is the path of the socket files bound by a Unix socket server. For example, the Unix socket server files created for libvirt VMs are in the /var/lib/libvirt directory, so add that directory path to the allowlist in either of the following ways:

- Load the allowlist by using the qtcfg utility. First compile the utility in qtfs/qtinfo.

Run the following command on the qtfs client:

make role=client

make install

Run the following command on the qtfs server:

make role=server

make install

After qtcfg is installed, run it to configure the allowlist. For example, to add /var/lib/libvirt to the allowlist:

qtcfg -x /var/lib/libvirt/

Query the allowlist:

qtcfg -z

Delete an allowlist entry:

qtcfg -y 0

The parameter is the index number listed when you query the allowlist.

- Add an allowlist entry through the configuration file. The configuration file must be in place before the qtfs or qtfs_server kernel module is loaded, because the allowlist is loaded when the kernel modules are initialized.

NOTE: The allowlist prevents unrelated Unix sockets from establishing remote connections, which could cause errors or waste resources. Set the allowlist as precisely as possible. For example, this document adds /var/lib/libvirt for the libvirt scenario. Adding /var/lib, /var, or the root directory directly would be risky.
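If allowlist entries are added from a script, a guard like the one below can enforce the NOTE before qtcfg -x is called. This is a hypothetical helper, not part of qtcfg. The rejected paths are exactly the examples named in the NOTE.

```shell
# Hypothetical guard for qtfs allowlist entries (not part of qtcfg).
# Rejects the overly broad paths named in the NOTE, and relative paths.
allowlist_entry_ok() {
    local path=${1%/}                # drop one trailing slash
    case ${path:-/} in
        /|/var|/var/lib)
            echo "refusing overly broad allowlist entry: $1" >&2
            return 1 ;;
        /*)
            return 0 ;;
        *)
            echo "allowlist entry must be an absolute path: $1" >&2
            return 1 ;;
    esac
}

# Add an entry with qtcfg -x only if it passes the guard.
add_allowlist_entry() {
    allowlist_entry_ok "$1" && qtcfg -x "$1"
}
```

For example, add_allowlist_entry /var/lib/libvirt/ calls qtcfg -x /var/lib/libvirt/, while add_allowlist_entry /var/ is refused.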

3.3 rexec Service Deployment

3.3.1 Introduction

rexec is a remote execution component written in C. It consists of the rexec client and the rexec server: the server runs as a daemon, and the client is a standalone binary. When started, the client establishes a UDS connection to the server through the udsproxyd service, and the server daemon then starts the specified program on the server machine. In libvirt virtualization offload, libvirtd is offloaded to the DPU. When libvirtd needs to start a QEMU process on the HOST, it invokes the rexec client to start the process remotely.

3.3.2 Deploying rexec

3.3.2.1 Configuring the Environment Variables and Allowlist

Configure the rexec server allowlist on the HOST. Put the allowlist file, named whitelist, in the /etc/rexec directory and make it read-only:

chmod 400 /etc/rexec/whitelist

In the test environment, the allowlist is not mandatory. You can disable the allowlist by deleting the whitelist file and restarting the rexec_server process.

After downloading the dpu-utilities code, go to the qtfs/rexec directory and run make && make install to install all binary files required by rexec (rexec and rexec_server) to the /usr/bin directory.

Before starting the rexec_server service on the server, make sure that the /var/run/rexec directory exists. The following command creates it if it is missing and does nothing otherwise:

mkdir -p /var/run/rexec
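The two preparation steps above (a read-only allowlist and the runtime directory) can be combined into one idempotent function. The optional root argument is a hypothetical addition that lets you try the function in a scratch directory. With no argument, it acts on the real /etc/rexec and /var/run/rexec.

```shell
# Sketch: the rexec_server prerequisites from this section in one function.
# ROOT is a hypothetical optional prefix for trying it in a scratch directory;
# with no argument the real /etc/rexec and /var/run/rexec are used.
prepare_rexec() {
    local root=${1:-}
    # mkdir -p creates the directory only if it does not exist yet.
    mkdir -p "$root/var/run/rexec" || return
    # Make an existing allowlist read-only (test setups may have none).
    if [ -f "$root/etc/rexec/whitelist" ]; then
        chmod 400 "$root/etc/rexec/whitelist" || return
    fi
}
```

Run prepare_rexec as root on the HOST before starting rexec_server.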

3.3.2.2 Starting the Service

You can start the rexec_server service on the server in either of the following ways.

- Method 1: Configure rexec as a systemd service.

Add the rexec.service file to /usr/lib/systemd/system.

Then, use systemctl to manage the rexec service.

Start the service for the first time:

systemctl daemon-reload

systemctl enable --now rexec

Restart the service:

systemctl stop rexec

systemctl start rexec

- Method 2: Manually start the service in the background.

nohup /usr/bin/rexec_server 2>&1 &
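If you do not have the rexec.service file at hand, a minimal unit along the following lines is one possible starting point for Method 1. It is an assumed example, not the unit file shipped with dpu-utilities. Type=simple assumes that rexec_server stays in the foreground; Method 2 backgrounds it with nohup and &, which suggests that it does not daemonize itself. ExecStartPre creates /var/run/rexec as required above.

```shell
# Sketch: write an assumed minimal rexec.service (not the dpu-utilities file).
# DIR defaults to the location used in Method 1.
write_rexec_unit() {
    local dir=${1:-/usr/lib/systemd/system}
    mkdir -p "$dir" || return
    cat > "$dir/rexec.service" <<'EOF'
[Unit]
Description=rexec server
After=network.target

[Service]
Type=simple
# rexec_server expects /var/run/rexec to exist.
ExecStartPre=/usr/bin/mkdir -p /var/run/rexec
ExecStart=/usr/bin/rexec_server
Restart=on-failure

[Install]
WantedBy=multi-user.target
EOF
}
```

Run write_rexec_unit as root, then use the systemctl commands from Method 1.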

3.4 libvirt Service Deployment

3.4.1 Deploying on the HOST

Install the VM runtime and libvirt. (libvirt is installed to create related directories.)

yum install -y qemu libvirt edk2-aarch64 # edk2-aarch64 is required only for starting VMs in the Arm environment

Put the VM image on the HOST. The image will be mounted to the client (the DPU) through qtfs and shared with libvirt.

3.4.2 Deploying on the DPU

3.4.2.1 Creating the Chroot Environment

(a) Download the QCOW2 image from the openEuler official website, for example, openEuler 23.09: https://repo.openeuler.org/openEuler-23.09/virtual_machine_img/.

(b) Mount the QCOW2 image.

cd /root/

mkdir p2 new_root_origin new_root

modprobe nbd max_part=8

qemu-nbd -c /dev/nbd0 xxx.qcow2

mount /dev/nbd0p2 /root/p2

cp -rf /root/p2/* /root/new_root_origin/

umount /root/p2

qemu-nbd -d /dev/nbd0

(c) The root directory of the image has now been copied to new_root_origin. Bind-mount new_root_origin onto new_root, which serves as the mount point for chroot.

mount --bind /root/new_root_origin /root/new_root
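Steps (b) and (c) can be collected into one function, shown below as a sketch. As above, it assumes that the root file system is on partition 2 and that /dev/nbd0 is not in use. It deliberately differs from the commands above in two places. It copies with cp -a so that ownership, permissions, and hidden files of the image root are preserved. It also calls udevadm settle so that /dev/nbd0p2 exists before it is mounted.

```shell
# Sketch: steps (b) and (c) of 3.4.2.1 as one function. Run as root.
# Assumptions: the root file system is on partition 2 of the image and
# /dev/nbd0 is free.
make_chroot_root() {
    local image=$1 base=${2:-/root} rc=1
    if [ ! -f "$image" ]; then
        echo "image not found: $image" >&2
        return 1
    fi
    mkdir -p "$base/p2" "$base/new_root_origin" "$base/new_root" || return
    modprobe nbd max_part=8 || return
    qemu-nbd -c /dev/nbd0 "$image" || return
    # Wait until udev has created partition device nodes such as /dev/nbd0p2.
    udevadm settle
    if mount /dev/nbd0p2 "$base/p2"; then
        # cp -a keeps ownership, permissions, links, and dotfiles.
        cp -a "$base/p2/." "$base/new_root_origin/" && rc=0
        umount "$base/p2"
    fi
    qemu-nbd -d /dev/nbd0
    [ "$rc" -eq 0 ] || return 1
    # new_root becomes the chroot mount point.
    mount --bind "$base/new_root_origin" "$base/new_root"
}
```

For example, make_chroot_root /root/xxx.qcow2 reproduces the steps above with /root as the base directory.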

3.4.2.2 Installing libvirt

Compile libvirt from source with the patch applied.

(a) Go to the chroot environment and install the compilation environment and common tools.

yum groupinstall "Development tools" -y

yum install -y vim meson qemu qemu-img strace edk2-aarch64 tar

edk2-aarch64 is required for starting VMs in the Arm environment.

(b) Install the dependency packages required for libvirt compilation.

yum install -y rpcgen python3-docutils glib2-devel gnutls-devel libxml2-devel libpciaccess-devel libtirpc-devel yajl-devel systemd-devel dmidecode glusterfs-api numactl

(c) Download the libvirt-x.x.x source code package https://libvirt.org/sources/libvirt-x.x.x.tar.xz.

(d) Obtain the libvirt patch:

https://gitee.com/openeuler/dpu-utilities/tree/master/usecases/transparent-offload/patches/libvirt.

(e) Decompress the source code package to a directory in the chroot environment, for example, /home, and apply the patch.

(f) Go to the libvirt-x.x.x directory and run the following command:

meson build --prefix=/usr -Ddriver_remote=enabled -Ddriver_network=enabled -Ddriver_qemu=enabled -Dtests=disabled -Ddocs=enabled -Ddriver_libxl=disabled -Ddriver_esx=disabled -Dsecdriver_selinux=disabled -Dselinux=disabled

(g) Complete the installation.

ninja -C build install
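Steps (e) to (g) can be scripted as follows. The sketch assumes that the downloaded patches are *.patch files in one directory and that they apply to the libvirt-x.x.x tree with patch -p1. Check the patch headers before relying on that. The meson options are the ones from step (f).

```shell
# Sketch: steps (e)-(g) of 3.4.2.2, run inside the chroot.
# Assumption: the patches are *.patch files that apply with patch -p1.

# Apply every *.patch file in PATCH_DIR to SRC_DIR, in glob (name) order.
apply_patches() {
    local src=$1 patch_dir=$2 p found=0
    for p in "$patch_dir"/*.patch; do
        [ -e "$p" ] || continue      # the glob matched nothing
        found=1
        patch -d "$src" -p1 < "$p" || return
    done
    if [ "$found" -eq 0 ]; then
        echo "no *.patch files in $patch_dir" >&2
        return 1
    fi
}

# The meson options from step (f), one per line.
libvirt_meson_opts() {
    printf '%s\n' --prefix=/usr -Ddriver_remote=enabled -Ddriver_network=enabled \
        -Ddriver_qemu=enabled -Dtests=disabled -Ddocs=enabled \
        -Ddriver_libxl=disabled -Ddriver_esx=disabled \
        -Dsecdriver_selinux=disabled -Dselinux=disabled
}

build_libvirt() {
    local src=$1 patch_dir=$2
    local -a opts
    apply_patches "$src" "$patch_dir" || return
    mapfile -t opts < <(libvirt_meson_opts)
    (cd "$src" && meson build "${opts[@]}" && ninja -C build install)
}
```

Call build_libvirt with the libvirt-x.x.x source directory and the directory that holds the downloaded patches.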

3.4.2.3 Starting the libvirtd Service

To use libvirt direct connection aggregation, the libvirtd service must run inside the chroot environment, so first stop the libvirtd service running outside the chroot environment.

(a) Put the VM jumper script in /usr/bin and /usr/libexec in the chroot environment to replace the qemu-kvm binary file. The jumper script will call rexec to start a remote VM.

NOTE: In the VM's domain XML file used by virsh, set <emulator> under <devices> to qemu-kvm. If <emulator> is set to another value, either change it to qemu-kvm or replace the binary specified by <emulator> with the jumper script. In either case, modify the content of the jumper script accordingly.

(b) Copy the libudsproxy.so file generated during udsproxyd compilation to the /usr/lib64 directory in the chroot directory. If udsproxyd is used transparently through the qtfs UDS allowlist, you do not need to copy libudsproxy.so.

(c) Save the rexec binary file generated during rexec compilation to the /usr/bin directory of the chroot environment.

(d) The chroot environment requires several directories to be mounted. Use the following scripts to configure it:

- virt_start.sh is the configuration script. In the script, you need to manually change the qtfs.ko path to the path of the compiled .ko file and set the correct HOST IP address.

- virt_umount.sh is the configuration revert script.

(e) The mount directories in the script are based on the examples in this document. You can modify the paths in the script as required.

(f) After the chroot environment is configured, enter the chroot environment and manually start libvirtd.

If qtfs is not configured to use the udsproxyd allowlist, run the following commands:

LD_PRELOAD=/usr/lib64/libudsproxy.so virtlogd -d

LD_PRELOAD=/usr/lib64/libudsproxy.so libvirtd -d

If qtfs is configured to use the udsproxyd allowlist, the LD_PRELOAD prefix is not required:

virtlogd -d

libvirtd -d

To check whether the allowlist is configured, run the following command in another terminal that is not in the chroot environment:

qtcfg -z

Check whether the allowlist contains /var/lib/libvirt.
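This document does not reproduce the jumper script from step (a). A minimal sketch is shown below. It assumes that the rexec client takes the program path followed by its arguments and runs that program on the HOST, so verify this against the rexec version you built. REXEC_BIN and HOST_QEMU are hypothetical variables, and HOST_QEMU is assumed to be /usr/bin/qemu-kvm on the HOST. Because the script uses exec, the rexec client keeps the PID that libvirtd spawned for QEMU.

```shell
#!/bin/bash
# Sketch of a qemu-kvm jumper script, installed as /usr/bin/qemu-kvm and
# /usr/libexec/qemu-kvm inside the chroot.
# Assumption: "rexec <program> <args...>" runs <program> on the HOST.
REXEC_BIN=${REXEC_BIN:-/usr/bin/rexec}
HOST_QEMU=${HOST_QEMU:-/usr/bin/qemu-kvm}

# Replace this process with the rexec client, passing all arguments through.
run_on_host() {
    exec "$REXEC_BIN" "$HOST_QEMU" "$@"
}

# Forward only when invoked under the qemu-kvm name.
case "${0##*/}" in
    qemu-kvm) run_on_host "$@" ;;
esac
```

Install the script as both /usr/bin/qemu-kvm and /usr/libexec/qemu-kvm in the chroot and make it executable, for example with chmod 755.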
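Steps (d) and (f) can also be driven from outside the chroot, as in the sketch below. It is not the content of virt_start.sh, which also loads qtfs.ko and sets the HOST IP address. The sketch assumes that qtfs is already loaded and connected. It also assumes that qtfs mounts use the mount -t qtfs <HOST dir> <local dir> form from section 3.5.1. The mode argument of start_libvirt_daemons selects between the two startup variants in step (f). Setting DRY_RUN prints the commands instead of running them. All function names are hypothetical.

```shell
# Sketch of a chroot bring-up for libvirtd on the DPU (not virt_start.sh).
# Assumptions: ROOT is the bind-mounted new_root from 3.4.2.1 and qtfs.ko is
# loaded and connected to the HOST.
# ${DRY_RUN:+echo} prints each command instead of running it when DRY_RUN is set.

# Kernel file systems that a chroot normally needs.
mount_chroot_basics() {
    local root=$1
    ${DRY_RUN:+echo} mount -t proc proc "$root/proc" || return
    ${DRY_RUN:+echo} mount -t sysfs sysfs "$root/sys" || return
    ${DRY_RUN:+echo} mount --bind /dev "$root/dev"
}

# Mount each HOST directory (for example /var/lib/libvirt) at the same path
# inside the chroot.
mount_qtfs_dirs() {
    local root=$1 dir
    shift
    for dir in "$@"; do
        ${DRY_RUN:+echo} mkdir -p "$root$dir" || return
        ${DRY_RUN:+echo} mount -t qtfs "$dir" "$root$dir" || return
    done
}

# Start virtlogd, then libvirtd, inside the chroot.
#   preload:   qtfs allowlist not configured, preload libudsproxy.so
#   allowlist: /var/lib/libvirt is in the qtfs allowlist, no preload needed
start_libvirt_daemons() {
    local root=$1 mode=$2 d
    local -a prefix=()
    case $mode in
        preload)   prefix=(env LD_PRELOAD=/usr/lib64/libudsproxy.so) ;;
        allowlist) ;;
        *) echo "mode must be preload or allowlist" >&2; return 2 ;;
    esac
    for d in virtlogd libvirtd; do
        ${DRY_RUN:+echo} chroot "$root" "${prefix[@]}" "$d" -d || return
    done
}
```

For example: mount_chroot_basics /root/new_root, then mount_qtfs_dirs /root/new_root /var/lib/libvirt, then start_libvirt_daemons /root/new_root allowlist.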

3.5 VM Startup

After the services are deployed, you can manage the VM life cycle from the DPU.

3.5.1 Defining the VM

(a) Place the VM boot image in a directory on the HOST, for example:

/home/VMs/Domain_name

(b) Use qtfs to mount the directory to the DPU.

mount -t qtfs /home/VMs /home/VMs

(c) In the XML file, use /home/VMs/Domain_name as the boot image. Because the path is identical on the DPU and the HOST, both see the same image file (Domain_name is the VM domain name).

(d) Check whether <emulator> in the XML file points to the jumper script.

(e) Define the VM.

virsh define xxx.xml
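Steps (d) and (e) can be combined so that virsh define runs only when <emulator> points at the jumper script. The sketch assumes that the jumper is installed as /usr/bin/qemu-kvm or /usr/libexec/qemu-kvm and that <emulator> is written on a single line. The sed-based extraction is a crude line match, not an XML parser. The function names are hypothetical.

```shell
# Sketch: refuse to define a VM whose <emulator> is not the jumper script.
# Assumption: <emulator>...</emulator> appears on one line of the domain XML.

# Print the first <emulator> value found in XML file $1.
emulator_of() {
    sed -n 's:.*<emulator>[[:space:]]*\([^<[:space:]]*\)[[:space:]]*</emulator>.*:\1:p' "$1" | head -n 1
}

check_and_define() {
    local xml=$1 emu
    emu=$(emulator_of "$xml")
    case $emu in
        /usr/bin/qemu-kvm|/usr/libexec/qemu-kvm)
            virsh define "$xml" ;;
        *)
            echo "<emulator> is '${emu:-missing}', expected the qemu-kvm jumper" >&2
            return 1 ;;
    esac
}
```

For example, check_and_define xxx.xml replaces the plain virsh define command above.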

3.5.2 Starting the VM

virsh start domain

4 Environment Reset

Some libvirt directories are shared between the DPU and the HOST, so you must unmount them before tearing down the environment. Generally, stop the libvirtd and virtlogd processes and then run the virt_umount.sh script. If a VM is running on the HOST, stop the VM before unmounting the directories.
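A teardown along these lines might look like the sketch below. It is not virt_umount.sh. It stops the daemons with pkill and then unmounts every mount point below the chroot root, deepest first, using the mount table in /proc/self/mounts. The new_root bind mount itself is left in place. Note that pkill -x also matches libvirtd and virtlogd processes outside the chroot. Also, pkill only sends SIGTERM, so a umount can still fail because a mount point is busy if a daemon has not exited yet. MOUNTS_FILE and DRY_RUN are hypothetical variables for trying the sketch out safely.

```shell
# Sketch of an environment reset on the DPU (not virt_umount.sh).
# Stop any running VM on the HOST before calling reset_environment.
# ${DRY_RUN:+echo} prints each command instead of running it when DRY_RUN is set.

# Unmount every mount point strictly below ROOT, deepest first.
# (Paths containing spaces appear escaped as \040 and are not handled.)
umount_under() {
    local root=${1%/} target
    awk -v r="$root/" 'index($2, r) == 1 { print $2 }' \
        "${MOUNTS_FILE:-/proc/self/mounts}" | LC_ALL=C sort -r |
    while read -r target; do
        ${DRY_RUN:+echo} umount "$target"
    done
}

reset_environment() {
    local root=$1
    # pkill returns 1 when nothing matched; that is fine here.
    ${DRY_RUN:+echo} pkill -x libvirtd
    ${DRY_RUN:+echo} pkill -x virtlogd
    umount_under "$root"
}
```

For example, reset_environment /root/new_root stops the daemons and unmounts what was mounted below the chroot root.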

5 Common Errors

libvirt compilation failure: Check whether the dependency packages are installed. If an external directory or HOST directory is mounted to the chroot environment, the compilation may fail. In this case, unmount the directory first.

qtfs mounting failure: The qtfs connection fails because the engine process on the server is not running or the firewall has not been disabled.

VM definition failure: Check whether the emulator in the XML file points to the jumper script, whether the VM image has been mounted to the DPU through qtfs, and whether the path is the same as that on the HOST.

VM startup failure: Check whether the libvirtd and virtlogd services are started, whether the rexec service is started, whether the jumper process is started, and whether an error is reported when qemu-kvm is started.